I discovered that I failed to properly upload documentation for erlperf to hexdocs.pm. While I’m working to fix that (and going over the pain of edoc → ex_doc migration), here are answers to your questions:

- Does erlperf:run/2 return the number of Queries Per Second?

Yes, if you requested a simple report, erlperf:run/2 returns the average of all collected (non-warmup) samples. Consider this configuration: #{samples => 30, sample_duration => 1000, warmup => 3}. It tells erlperf to run for 33 seconds in total (seconds, because sample_duration is set to 1000 ms). The first 3 samples are discarded (they are “warm-up samples”), and the average of the remaining 30 samples is returned.

You can request actual samples by setting report => extended. In the example below (started from rebar3 shell in the erlperf folder), I run rand:uniform(). for 5 samples, and get a list of 5 values (iterations per second):

(erlperf@ubuntu22)1> erlperf:run({rand, uniform, []}, #{samples => 5, report => extended}).

[15064123,15092843,15148119,15105712,15145791]
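If you want statistics other than the average, the extended report gives you the raw list to work with. Here is a minimal sketch in plain Erlang, assuming the samples are a non-empty list of numbers like the one above. The module name sample_stats and both functions are hypothetical helpers, not part of erlperf:

```erlang
-module(sample_stats).
-export([mean/1, median/1]).

%% Arithmetic mean of a non-empty list of samples (returns a float).
mean(Samples) ->
    lists:sum(Samples) / length(Samples).

%% Median of a non-empty list of samples: the middle element for an odd
%% number of samples, the mean of the two middle elements otherwise.
median(Samples) ->
    Sorted = lists:sort(Samples),
    Len = length(Sorted),
    Mid = Len div 2,
    case Len rem 2 of
        1 -> lists:nth(Mid + 1, Sorted);
        0 -> (lists:nth(Mid, Sorted) + lists:nth(Mid + 1, Sorted)) / 2
    end.
```

For the five samples above, sample_stats:median/1 returns 15105712.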

- How can I specify the duration for my test (ex. run it for 30 seconds)?

You can specify it this way: #{samples => 30}. Because the default sample_duration is 1000 ms, the test should run for 30 seconds (and return the average QPS).

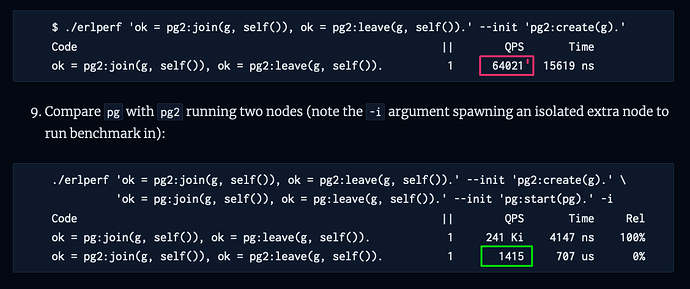

- When setting concurrency => 1000, the test never finishes. What do you advise to set concurrency to?

I found a deficiency (*) in erlperf that caused it to work incorrectly under extreme scheduler utilisation (which is almost guaranteed under such a heavy load). I just fixed it; could you please update erlperf to version 2.1.0 or above? With that update, it will report correctly for any reasonable concurrency (including 1000 or 10000).

One more note: erlperf has built-in support for estimating how concurrent your code is. It’s called “concurrency estimation”, or “squeeze” mode. You can use it either from the command line (-q argument) or by calling run/3 with the corresponding options. It will tell you how many concurrent processes saturate your code. See this blogpost for a few more hints.

- Could you please explain the samples option and how it relates to duration?

erlperf runs your runner function in an endless loop, bumping a counter every time the function is invoked. This counter grows monotonically. Every sample_duration milliseconds (there is no duration option), the value of the counter is recorded. When erlperf has collected as many counter values as the samples option asks for, it considers the test complete, calculates the differences between consecutive recorded counter values, and returns their average (you can also get the actual samples and perform your own statistical operations, e.g. take the median). In some cases (e.g. to warm up the cache) it’s necessary to discard the first few samples; this is done with the warmup option.
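The loop described above can be sketched in a few lines. This is an illustration only, not erlperf's actual implementation: the module name sampling_sketch, the use of atomics for the counter, and the single worker process are all assumptions made for this example.

```erlang
-module(sampling_sketch).
-export([run/3, diffs/1]).

%% Runs Fun in an endless loop in a separate process, records the counter
%% every SampleDuration milliseconds, and returns the average number of
%% calls per sample. Samples must be at least 1.
run(Fun, Samples, SampleDuration) ->
    Counter = atomics:new(1, []),
    Worker = spawn_link(fun() -> loop(Fun, Counter) end),
    Start = atomics:get(Counter, 1),
    Values = [sample(Counter, SampleDuration) || _ <- lists:seq(1, Samples)],
    unlink(Worker),
    exit(Worker, kill),
    Deltas = diffs([Start | Values]),
    lists:sum(Deltas) div length(Deltas).

%% The worker: call the function, bump the counter, repeat forever.
loop(Fun, Counter) ->
    Fun(),
    atomics:add(Counter, 1, 1),
    loop(Fun, Counter).

%% Wait for one sample interval, then read the current counter value.
sample(Counter, SampleDuration) ->
    timer:sleep(SampleDuration),
    atomics:get(Counter, 1).

%% Differences between consecutive counter values.
diffs([A, B | Tail]) -> [B - A | diffs([B | Tail])];
diffs([_]) -> [].
```

With SampleDuration set to 1000, the value returned by sampling_sketch:run/3 is calls per second. For other durations it is calls per sample interval and would need scaling.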

(*) Update erlperf to 2.1.0

When I tried running the example I suggested (writing to an ETS table) with high concurrency (more than the number of CPU cores I had available), I noticed that the benchmark results did not look right. The benchmark itself was taking longer than expected, and results fluctuated quite a bit. At first, I suspected that many workers running concurrently were stealing CPU time from the process that was taking samples. That was indeed true, but it was easily solved by setting process_flag(priority, high) before calling erlperf:run/2. Still, it wasn’t enough to provide stable measurements: when the VM experiences heavy lock contention, it won’t schedule high-priority processes on time either. Hence timer:sleep(1000) resulted in a significantly longer delay, up to 2 seconds.

Fortunately, it is easy to detect this and switch to a busy-wait loop (constantly checking the monotonic clock value) when timer:sleep precision gets too low for benchmarking purposes. Essentially, that’s the main body of the 2.1.0 update. The switch should only happen when lock contention is really high (otherwise ERTS scheduling should not skew too far from the expected sample duration), hence the busy loop should not affect the result.
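To make the idea concrete, here is a rough sketch of detecting an imprecise timer:sleep and falling back to a busy wait. It is not the code from the 2.1.0 update: the module name wait_sketch, the wait/2 function, and the 10% overshoot tolerance are assumptions chosen for illustration.

```erlang
-module(wait_sketch).
-export([wait/2]).

%% Hypothetical tolerance: timer:sleep is considered too imprecise when it
%% overshoots the requested duration by more than 10%.
-define(MAX_OVERSHOOT, 1.1).

%% Waits roughly Duration milliseconds using the given mode (sleep | busy).
%% Returns {ElapsedMs, NextMode}, where NextMode is the mode to use for
%% the following sample.
wait(sleep, Duration) ->
    Start = erlang:monotonic_time(millisecond),
    timer:sleep(Duration),
    Elapsed = erlang:monotonic_time(millisecond) - Start,
    NextMode = case Elapsed > Duration * ?MAX_OVERSHOOT of
                   true -> busy;   % sleep overshot too much, stop trusting it
                   false -> sleep
               end,
    {Elapsed, NextMode};
wait(busy, Duration) ->
    Start = erlang:monotonic_time(millisecond),
    busy_wait_until(Start + Duration),
    {erlang:monotonic_time(millisecond) - Start, busy}.

%% Spin on the monotonic clock until the deadline is reached.
busy_wait_until(Deadline) ->
    case erlang:monotonic_time(millisecond) >= Deadline of
        true -> ok;
        false -> busy_wait_until(Deadline)
    end.
```

A sampling loop would start in sleep mode and pass the returned mode into the next call, so it only burns CPU on waiting once sleeping has proven unreliable.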

(**) To resolve the problem with ETS table concurrency, apply the {read_concurrency, true} and {write_concurrency, auto} options to the ets:new/2 call. In my tests this increases QPS under high concurrency (at the expense of the total function latency):

| Code | \|\| | QPS | Time |
|---|---|---|---|
| run(_, S) -> R = rand:mwc59(S), true = ets: | 10 | 5173 Ki | 1930 ns |
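For reference, a table with these options could be created as sketched below. The module name ets_sketch, the table name bench_tab, and the write/1 helper are hypothetical and are not the benchmark code measured above. Note that {write_concurrency, auto} requires OTP 25 or newer.

```erlang
-module(ets_sketch).
-export([new_table/0, write/1]).

%% Creates a public table tuned for concurrent access.
%% {write_concurrency, auto} is only accepted on OTP 25 and later.
new_table() ->
    ets:new(bench_tab, [public, set,
                        {read_concurrency, true},
                        {write_concurrency, auto}]).

%% Writes one object under a random key; ets:insert/2 returns true.
write(Tab) ->
    Key = rand:uniform(1000),
    true = ets:insert(Tab, {Key, Key}).
```

The table has to be public here because benchmark workers are separate processes and would not be allowed to write to a protected table owned by another process.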