Hello all, I’m doing a bit of POC work for a potential project and I’ve come up against something I don’t yet understand.

I’m running identical code on my 10-core M1 MacBook Pro and on a large 128-core/128GB RAM cloud VM (a GCP n2d-highcpu-128), and it reliably finishes all the work around 3-3.5x slower (wall-clock time) on the cloud VM than on the Mac, seemingly using very little of the available CPU. Sounds like there’s got to be a limit somewhere but I can’t find it.

I’ve run heavily-loaded Erlang websocket servers on similar machines before and seen all CPUs at high utilisation just using mostly vanilla config, so I was expecting the Linux box to crush it effortlessly.

I realise it could be my code causing this, or triggering some difference that causes this, but the identical code behaves really differently on each platform and I don’t know where to look.

Some more info, and where I’ve looked so far:

- I’m running a single supervised `gen_server` process which has a `handle_call/3` function that spawns a specified number of plain non-OTP processes. Each process does a simple calculation in its startup function, writes a single result record into a `duplicate_bag` ETS table, and goes into a `receive` `loop()`. The ETS table has `write_concurrency` and `read_concurrency` both set to `true`. The table writes are working fine - I have some fetch specs which check the data after everything is done, and it’s all present and correct.

- (I’ve tried doing it without the ETS writes; naturally that finishes much faster on both systems, but it’s still reliably more than 3x slower just to start the processes on the Linux VM than it is on the MacBook.)

- The `handle_call` function just does `spawn()` inside a list comprehension with `lists:seq(1,X)` as the generator, and notes start and end times with `erlang:monotonic_time(microseconds)` before and after the comprehension. I realise this isn’t that realistic a scenario and there will be smarter ways to do this (this is just a POC), but regardless, it takes much longer to do the same `spawn()`s and writes on the VM than it does on the Mac:

100k: 974ms Mac, 2772ms Linux (2.85x faster on Mac)

500k: 4872ms Mac, 13836ms Linux (2.83x faster on Mac)

750k: 7.2s Mac, 20.8s Linux (2.88x faster on Mac)

1m: 10s Mac, 36s Linux (3.6x faster on Mac)

1.5m: 14.4s Mac, 44.5s Linux (3.08x faster on Mac)
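A minimal sketch of the setup described above, for anyone who wants to reproduce the shape of the test. It is not the actual code: the module name `spawn_bench`, the table name `results`, and the `I * I` calculation are hypothetical placeholders, and the `gen_server` wrapper is left out so that only the spawn-and-write part remains.

```erlang
-module(spawn_bench).
-export([run/1, stop/1]).

%% Spawns N plain processes and times the list comprehension only,
%% mirroring the measurement described in the post.
%% Returns {ElapsedMicroseconds, Table, Pids}.
run(N) when is_integer(N), N >= 0 ->
    %% public, because the workers (not the table owner) do the writes
    Tab = ets:new(results, [duplicate_bag, public,
                            {write_concurrency, true},
                            {read_concurrency, true}]),
    T0 = erlang:monotonic_time(microsecond),
    Pids = [spawn(fun() -> worker(Tab, I) end) || I <- lists:seq(1, N)],
    T1 = erlang:monotonic_time(microsecond),
    {T1 - T0, Tab, Pids}.

%% Placeholder for the "simple calculation": one record per process.
worker(Tab, I) ->
    ets:insert(Tab, {I, I * I}),
    loop().

%% Each process idles until it is told to die.
loop() ->
    receive
        die -> ok;
        _Other -> loop()
    end.

%% Sends the die message to every spawned process.
stop(Pids) ->
    lists:foreach(fun(P) -> P ! die end, Pids),
    ok.
```

Note that the elapsed time covers only the `spawn()` calls themselves; the workers may still be writing to the table when `run/1` returns.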

- Those numbers seem to suggest there’s maybe also something that happens between 750k and 1m which makes the difference widen. I checked `/proc/sys/kernel/threads-max`, which shows `1030974`, so it can’t be that; otherwise (I think!) the Linux BEAM would bomb out with `system_limit` errors after ~1m.

- Here’s the `ulimit` output:

igor@cloud-vm:~$ ulimit -a

real-time non-blocking time (microseconds, -R) unlimited

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 515487

max locked memory (kbytes, -l) 16498290

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 515487

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

-

I tried changing

ulimit -u 2000000butulimit: max user processes: cannot modify limit: Operation not permitted, and when I did it all asroot it changed the setting but the spawning/thread/core situation was the same. Same for

it changed the setting but the spawning/thread/core situation was the same. Same for ulimit -n open files(although I’m not doing anything in the filesystem, but unix). -

The only custom BEAM configuration is

ERL_FLAGS="+P 2000000"and the code is running insiderebar3 shellon both setups (Linux VM reportsErlang/OTP 25 [erts-13.0] [source] [64-bit] [smp:128:128] [ds:128:128:10] [async-threads:1] [jit:ns]). I’ve tried+K truejust to be sure even though there’s no file or socket usage, and fiddled with+Aand+Sto no avail. -

I’ve tried it in OTP 26.2.5 and 25.3.2.12 on my Mac, and I tried it in both 25.0.1/ESL and 27.0 on the VM (Debian 11, couldn’t find an R26 .deb, can try again if I can find a PPA or something for that). At first I thought it could be related to JIT improvements after R25, but then I got the same results on R27. (Also, R25 seems to do the work a bit faster than R26 on Mac(!).) I could put some of the difference down to running inside a VM, but these VM machines are used for native C++ apps distributed across all cores using GNU

parallel, and their multi-core performance on those hits 100% per core and blows the Mac out of the water, as expected, so I don’t know. -

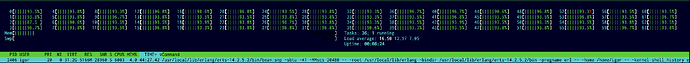

I ran the code while watching

vmstat 1on the VM. It showed mostly idle CPU with interrupts and context switching going crazy while doing the spawning, and the same thing happening when sending adiemessage to all the processes (which causes them to justokout of theirloop()):

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 0 130103960 35472 754272 0 0 0 0 243 230 0 0 100 0 0

0 0 0 130104288 35472 754272 0 0 0 0 223 238 0 0 100 0 0

0 0 0 130104304 35472 754272 0 0 0 0 215 231 0 0 100 0 0

3 0 0 129778840 35472 754320 0 0 0 0 37213 64426 2 1 97 0 0 <- spawn()ing starts

5 0 0 129594880 35472 754320 0 0 0 0 45649 77408 2 1 97 0 0

4 0 0 129417056 35472 754320 0 0 0 0 45154 76444 3 1 97 0 0

5 0 0 129245264 35472 754320 0 0 0 0 45279 76551 3 1 96 0 0

5 0 0 129079640 35472 754320 0 0 0 0 44406 79255 3 1 96 0 0

5 0 0 128905848 35472 754320 0 0 0 0 42900 72504 2 1 97 0 0

5 0 0 128662480 35472 754320 0 0 0 0 43158 74755 3 1 96 0 0

4 0 0 128505176 35472 754320 0 0 0 0 43207 73535 2 1 97 0 0

8 0 0 128360384 35472 754320 0 0 0 0 42966 72490 2 1 97 0 0

[...]

3 0 0 125054408 35472 754776 0 0 0 0 44979 64148 2 1 97 0 0

0 0 0 125008624 35472 754880 0 0 0 0 23969 33793 1 1 98 0 0

0 0 0 125018416 35472 754880 0 0 0 0 473 453 0 0 100 0 0 <- spawn()ing finished

0 0 0 125018400 35472 754880 0 0 0 0 237 238 0 0 100 0 0

1 0 0 125018960 35472 754880 0 0 0 0 342 460 0 0 100 0 0

0 0 0 125019072 35472 754880 0 0 0 0 415 543 0 0 100 0 0

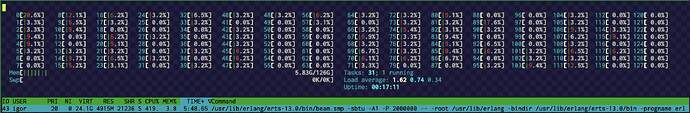

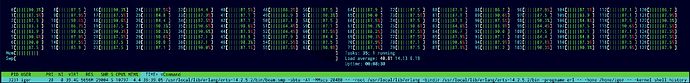

- I also noticed while running `htop` during the spawn that there was much sparser green/red (user/sys) activity in the CPU-core grid than I’d expect, and much smaller load (1.62)/task (31) numbers.

- In fact the total CPU usage of the `beam.smp` process was only ever at maximum 450-500%. Again, these machines are often used to run many copies of single-threaded apps distributed across multiple cores, and I often see all cores running at 100% each, i.e. 12800% total utilisation. I realise that’s a different scenario, and the BEAM is a single process distributing work across multiple threads - but on the Mac it seems to just scale up automatically and I see all cores blazing for a much shorter time, whereas on the Linux VM I see many idle cores and the work takes a lot longer overall.

So, lower than expected CPU utilisation on fewer cores than expected, plus high context switching, seems to suggest that the BEAM is doing way more work scheduling and allocating work to a smaller pool than it could be working with.
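One way to cross-check that impression from inside the BEAM, rather than from `htop`, is to sample `erlang:statistics(scheduler_wall_time)` before and after the work and compare the two. The sketch below is only an illustration of that technique under some assumptions: the module name `sched_util` and the function `measure/1` are hypothetical, and it reports the fraction of time each scheduler was busy while the supplied fun ran.

```erlang
-module(sched_util).
-export([measure/1]).

%% Runs Fun/0 and returns {Result, [{SchedulerId, Utilisation}]},
%% where Utilisation is a float: busy time / wall time for that
%% scheduler over the duration of the call.
measure(Fun) when is_function(Fun, 0) ->
    %% Wall-time accounting is off by default; remember the old setting.
    Old = erlang:system_flag(scheduler_wall_time, true),
    S0 = lists:sort(erlang:statistics(scheduler_wall_time)),
    Result = Fun(),
    S1 = lists:sort(erlang:statistics(scheduler_wall_time)),
    erlang:system_flag(scheduler_wall_time, Old),
    %% Pair up the two samples per scheduler id and take the deltas.
    Util = [{Id, ratio(A1 - A0, W1 - W0)}
            || {{Id, A0, W0}, {Id, A1, W1}} <- lists:zip(S0, S1)],
    {Result, Util}.

%% Guards against a zero-length sampling window.
ratio(_Active, 0) -> 0.0;
ratio(Active, Wall) -> Active / Wall.
```

Wrapping the call that triggers the spawning in `sched_util:measure/1` would show whether only a handful of schedulers report any meaningful busy time, which is what the `htop` picture suggests.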

I must be either doing (/not doing) something in my code, or missing some Linux+Erlang config, that’s making that happen. I’m guessing it’s something to do with process/thread management/distribution, but I don’t know what else to look at to try and change or tune.

Any tips would be very welcome, thanks!